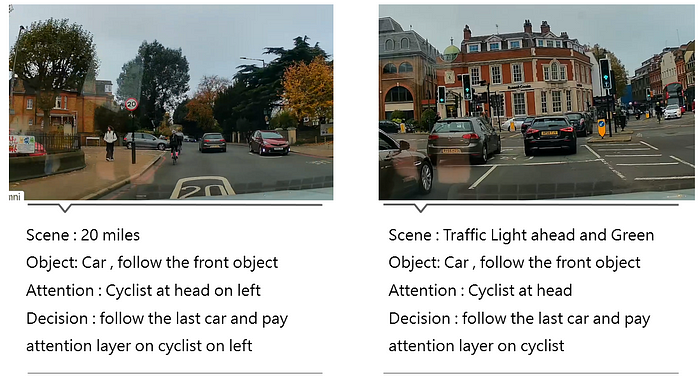

Last month, we introduced HOS 2.0, our end-to-end algorithm that uses camera inputs for object detection, drivable range estimation, and decision-making. We also mentioned our upcoming Vision-Language Model (VLM) integration, designed to generate textual descriptions of road situations for clearer interpretability.

1. Drive-by-Wire System Integration

This December, our main focus was on perfecting the drive-by-wire system for the Citroën AMI. Although the AMI is an electric vehicle (EV), it still relies on purely mechanical controls — making it the perfect testbed for a universal drive-by-wire setup that could be adapted to a wider range of vehicles without built-in computer controls.

Here’s what we’ve achieved so far:

- Steering: We can now precisely control the front wheels using a motor and encoder setup. The steering module reliably moves to the desired angle with minimal latency.

Video: https://www.youtube.com/shorts/cCxIpoP5FzM

- Acceleration: We intercept the signals from the AMI’s accelerator pedal and modify them via a custom PCB and ECU interface. This allows smooth and safe acceleration inputs.

- Braking: We’ve installed an actuator to control brake pedal movement. It works well but requires further tuning to emulate a natural braking feel — speed and force adjustments are next on the to-do list.

Video: https://www.youtube.com/shorts/a8SQPxR_teM

All these improvements mean the Citroën AMI can be driven entirely by our software, which is a huge milestone.
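
For readers curious what "moving to the desired angle" with a motor and encoder involves, here is a minimal sketch of a closed-loop position controller. It is illustrative only and is not the controller running on the vehicle: the `SteeringSim` class, the gain `kp`, the time step, and the 200 deg/s rate limit are all assumptions made up for this example, and a real system would add safety checks, filtering, and likely integral or derivative terms.

```python
from dataclasses import dataclass


@dataclass
class SteeringSim:
    """Toy stand-in for a motor + encoder pair (hypothetical, not real hardware)."""
    angle_deg: float = 0.0          # angle reported by the simulated encoder
    max_rate_deg_s: float = 200.0   # assumed speed limit of the steering motor

    def apply(self, rate_cmd_deg_s: float, dt: float) -> None:
        # Clamp the commanded rate to what the motor is assumed to deliver,
        # then integrate it over one control period.
        rate = max(-self.max_rate_deg_s, min(self.max_rate_deg_s, rate_cmd_deg_s))
        self.angle_deg += rate * dt


def steer_to(sim: SteeringSim, target_deg: float, kp: float = 8.0,
             dt: float = 0.01, tol_deg: float = 0.1, max_steps: int = 1000) -> int:
    """Proportional loop: command a rate proportional to the encoder error.

    Returns the number of control steps taken to get within tol_deg,
    or max_steps if the tolerance was never reached.
    """
    for step in range(max_steps):
        error = target_deg - sim.angle_deg   # read the encoder, compute the error
        if abs(error) <= tol_deg:
            return step
        sim.apply(kp * error, dt)            # send the rate command to the motor
    return max_steps


if __name__ == "__main__":
    sim = SteeringSim()
    steps = steer_to(sim, target_deg=15.0)
    print(f"reached {sim.angle_deg:.2f} deg in {steps} steps")
```

With these made-up values the product `kp * dt` is below 1, so the simulated wheel approaches the target without overshooting; on real hardware the gain would have to be tuned against the actual motor and linkage.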

2. Why Citroën AMI?

Some might wonder why we chose the AMI as our test platform. The main advantage is that, despite being electric, it doesn’t have advanced built-in drive control electronics. We see this as an opportunity: if we can seamlessly integrate a drive-by-wire system into a simple, mechanically driven vehicle, we can likely do it for any car in a commercial fleet that lacks native computer control.

3. Camera Upgrades

After testing multiple camera modules (including Tier IV’s high-resolution options and the OV5940), we found that Tier IV cameras offer an excellent balance of resolution and stability for our needs.

- High dynamic range: Helps the system handle challenging lighting conditions.

- Reliability: Ensures consistent performance even in rapidly changing road environments.

4. HOS 2.0 VLM: Generating Realistic Driving Videos

On the algorithmic side, we’ve made strides with HOS 2.0 VLM (Vision-Language Model). This model builds on Flexible Diffusion Model (FDM) principles, allowing us to generate long, coherent driving videos from just a few key frames:

- Sequential Video Sampling: By conditioning on only a handful of frames at a time, the model can produce realistic driving sequences spanning hundreds of frames.

- Flexible & Scalable: The system can adapt to different driving scenarios, thanks to advanced diffusion processes that iteratively refine synthetic frames.

- Training on Waymo Open Dataset: By leveraging this comprehensive dataset, HOS 2.0 VLM learns to handle diverse environments and situations, improving its real-world applicability.

Ultimately, these capabilities will provide valuable insights into how autonomous vehicles interact with complex road scenarios and enable us to better interpret our AI’s decision-making process through descriptive text.
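
To make the idea of sequential sampling more concrete, the sketch below builds the frame schedule for a simple autoregressive ordering: each stage generates a small batch of new frames while conditioning on the most recent frames already available. This is one possible ordering, shown under stated assumptions rather than as the scheme HOS 2.0 VLM uses; the function name `autoregressive_schedule` and the parameters `n_given`, `n_context`, and `n_new` are hypothetical, and the diffusion model call itself is left out.

```python
from typing import List, Tuple


def autoregressive_schedule(total_frames: int, n_given: int, n_context: int,
                            n_new: int) -> List[Tuple[List[int], List[int]]]:
    """Return (conditioning_indices, indices_to_generate) for each sampling stage.

    Frames [0, n_given) are the provided key frames. Every stage conditions on
    at most n_context already-available frames and generates at most n_new
    new ones, so the model never sees more than n_context + n_new frames at once.
    """
    if n_given < 1 or n_context < 1 or n_new < 1:
        raise ValueError("n_given, n_context and n_new must all be positive")

    stages = []
    next_frame = n_given
    while next_frame < total_frames:
        # Condition on the most recent frames (key frames or earlier outputs).
        context = list(range(max(0, next_frame - n_context), next_frame))
        # Generate the next chunk, truncated at the end of the video.
        new = list(range(next_frame, min(next_frame + n_new, total_frames)))
        stages.append((context, new))
        next_frame = new[-1] + 1
    return stages


if __name__ == "__main__":
    schedule = autoregressive_schedule(total_frames=300, n_given=4,
                                       n_context=10, n_new=10)
    for context, new in schedule[:3]:
        print(f"condition on {context[0]}..{context[-1]}, "
              f"generate {new[0]}..{new[-1]}")
```

In a full pipeline, each stage would pass its conditioning frames to the diffusion model and write the generated frames back into the video before the next stage runs. Other orderings, such as generating sparse frames first and filling in between them, fit the same interface.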

5. Looking Ahead

Our immediate priorities for January are:

- Complete Testing & Calibration: Now that the hardware is up and running, we need to ensure everything works under real-world conditions. This includes finalizing braking force, steering precision, and sensor calibration.

- On-Road Trials: We’re targeting January 2025 to have the first self-driving Citroën AMI operational on the road. Initial trials will take place in a controlled area, allowing us to refine our software and hardware under close supervision.

- Continuous Algorithmic Enhancements: Alongside hardware integration, our software and VLM components will be continuously improved. We’ll monitor how well the model handles new data, especially from Tier IV cameras, and adapt our approach as needed.

Final Thoughts

We’re proud of how far we’ve come — from building an advanced vision-based decision-making algorithm, HOS 2.0, to integrating a fully operational drive-by-wire system into the Citroën AMI. By combining robust hardware with cutting-edge generative video models, we’re positioning ourselves at the forefront of autonomous driving innovation.

Stay tuned for more updates as we move into on-road testing and continue refining our solution. Thank you for your ongoing support and confidence in our mission!

—

Have questions or feedback? Feel free to reach out at hello@osmosisai.co